"We're So Back" - the Analytics Engineering Angle

Sanity Check • No. 012

Hey y’all - here’s what’s new this week:

🦆 I got access to MotherDuck. If you want access too, I have 5 invites. LMK.

🎪 My house has termites! So the house gets a tent, and I get a quick stay-cation.

🧩 My dad invented a puzzle game, put 100 of them into a book, and published it on Amazon. If you enjoy Sudokus, you’ll enjoy Squarely. Check it out!

FRESH FEATURE

We’re So Back: Five Exciting Emerging AE Capabilities

July ended like it started - with fireworks 🎆

Well, at least it felt like fireworks. Breakthrough announcements kept bursting forth. Llama 2 was released. Inflation cooled. Earnings popped. Then came claims of a room-temperature superconductor. All the buzz had people saying, “We’re so back!”

That same excitement hasn’t been there for the analytics community lately. The industry has been in stasis for the better part of a year, but I’m sensing a reawakening. Here are five things I think will bring analytics engineering to its own “we’re so back” moment:

The Ultimate Rubber Duck

Modular Analytics

dbt Unchained

BI-as-Code

Paved Path to Prod

Allow me to expand…

The Ultimate Rubber Duck

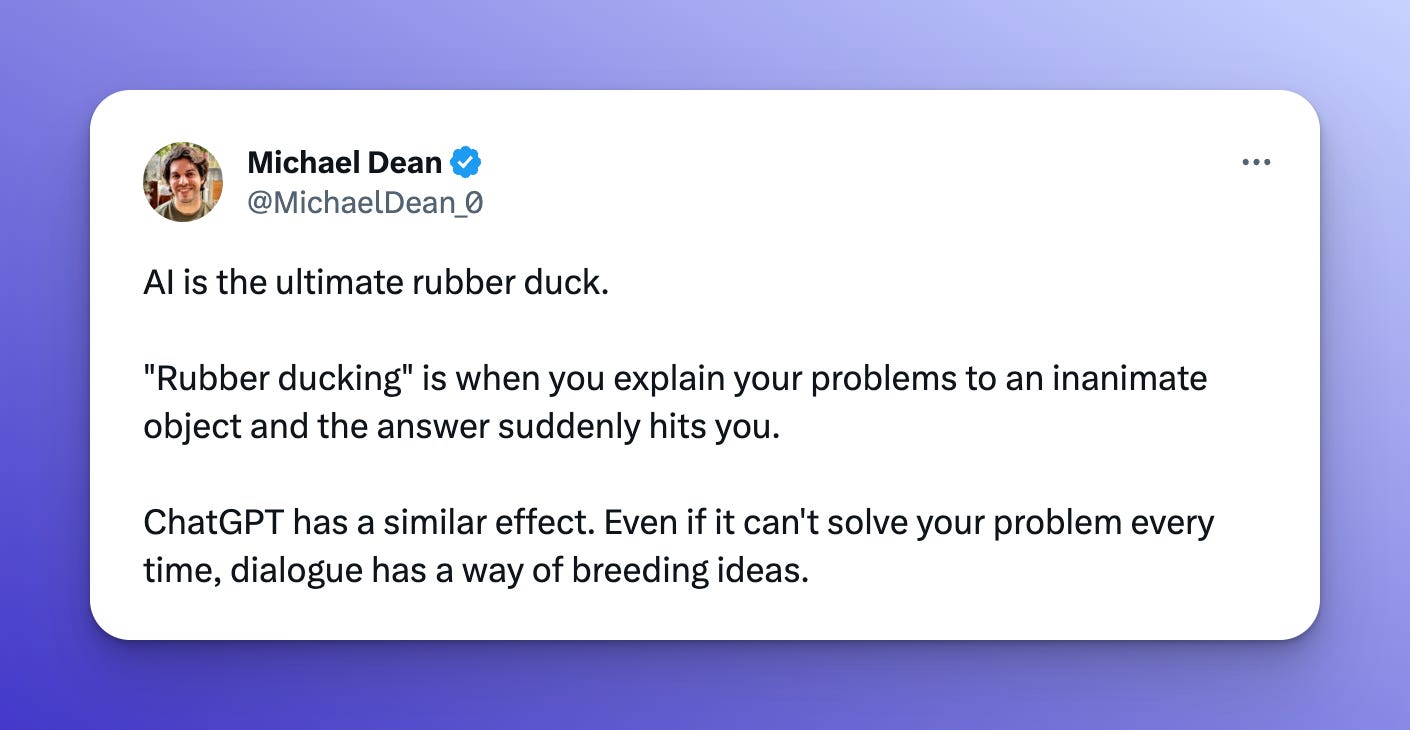

Michael Dean nailed this idea, saying:

My LLM-enabled rubber duck of choice has been GitHub Copilot Chat. The chat version of Copilot is still in beta, but I got in without a long wait.

Baseline Copilot is more of a fancy autocomplete. ChatGPT is best-in-class, but I quickly grew tired of context-switching between browser and editor. Copilot Chat sits in the Goldilocks zone: helpful without breaking your flow.

Modular Analytics

Analytics is a bundle. It tightly couples proprietary data with internal analyses. This means our work ends up shrouded in mystery. We can sort of describe it, but we cannot show it.

This coupling hurts our profession! It’s hard to attract new talent. Everyone solves the same problems in isolation. Skills don’t transfer between jobs because that’s “not how we think about it.” It doesn’t have to be this way.

That’s why I’m bullish on modular analytics. If it becomes easy enough to generate realistic fake datasets, that opens the door to transparent analyses: work we can actually show. That’d be great for educational material, data practitioner portfolios, and maybe even an analysis marketplace.

This is possible today. With dbt Python models, we can leverage packages like Faker and Factory Boy. Both come pre-bundled in Snowflake’s conda packages. This style of implementation is still too clunky for widespread adoption, but I believe it is only a matter of time before the rough edges get sanded down.
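Faker and Factory Boy are third-party packages. This minimal sketch swaps in Python’s standard-library `random` module to show the core idea behind them: a seeded generator that produces the same realistic-looking rows on every run. The `Customer` fields, name lists and plan weights are all hypothetical. In a dbt Python model, rows like these would be wrapped in a DataFrame and returned from `model(dbt, session)`.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical vocabularies; Faker ships far larger, locale-aware ones.
FIRST_NAMES = ["Ada", "Grace", "Alan", "Edsger", "Barbara", "Donald"]
LAST_NAMES = ["Lovelace", "Hopper", "Turing", "Dijkstra", "Liskov", "Knuth"]
PLANS = ["free", "pro", "enterprise"]


@dataclass(frozen=True)
class Customer:
    customer_id: int
    name: str
    email: str
    plan: str
    signup_date: date


def fake_customers(n: int, seed: int = 42, start: date = date(2023, 1, 1)) -> list[Customer]:
    """Generate n plausible-looking customers; the same seed always yields the same rows."""
    rng = random.Random(seed)  # private RNG, so results don't depend on global state
    customers = []
    for customer_id in range(1, n + 1):
        first, last = rng.choice(FIRST_NAMES), rng.choice(LAST_NAMES)
        customers.append(
            Customer(
                customer_id=customer_id,
                name=f"{first} {last}",
                # Suffixing the id keeps emails unique even when names repeat.
                email=f"{first.lower()}.{last.lower()}{customer_id}@example.com",
                # Weighted choice: most customers land on the free plan.
                plan=rng.choices(PLANS, weights=[70, 25, 5])[0],
                # Signup dates fall within the 365 days starting at `start`.
                signup_date=start + timedelta(days=rng.randrange(365)),
            )
        )
    return customers


if __name__ == "__main__":
    for customer in fake_customers(3):
        print(customer)
```

The key design choice is seeding a private `random.Random` instance. Anyone who reruns the model gets identical data, and that is what makes a shared analysis reproducible.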

dbt Unchained

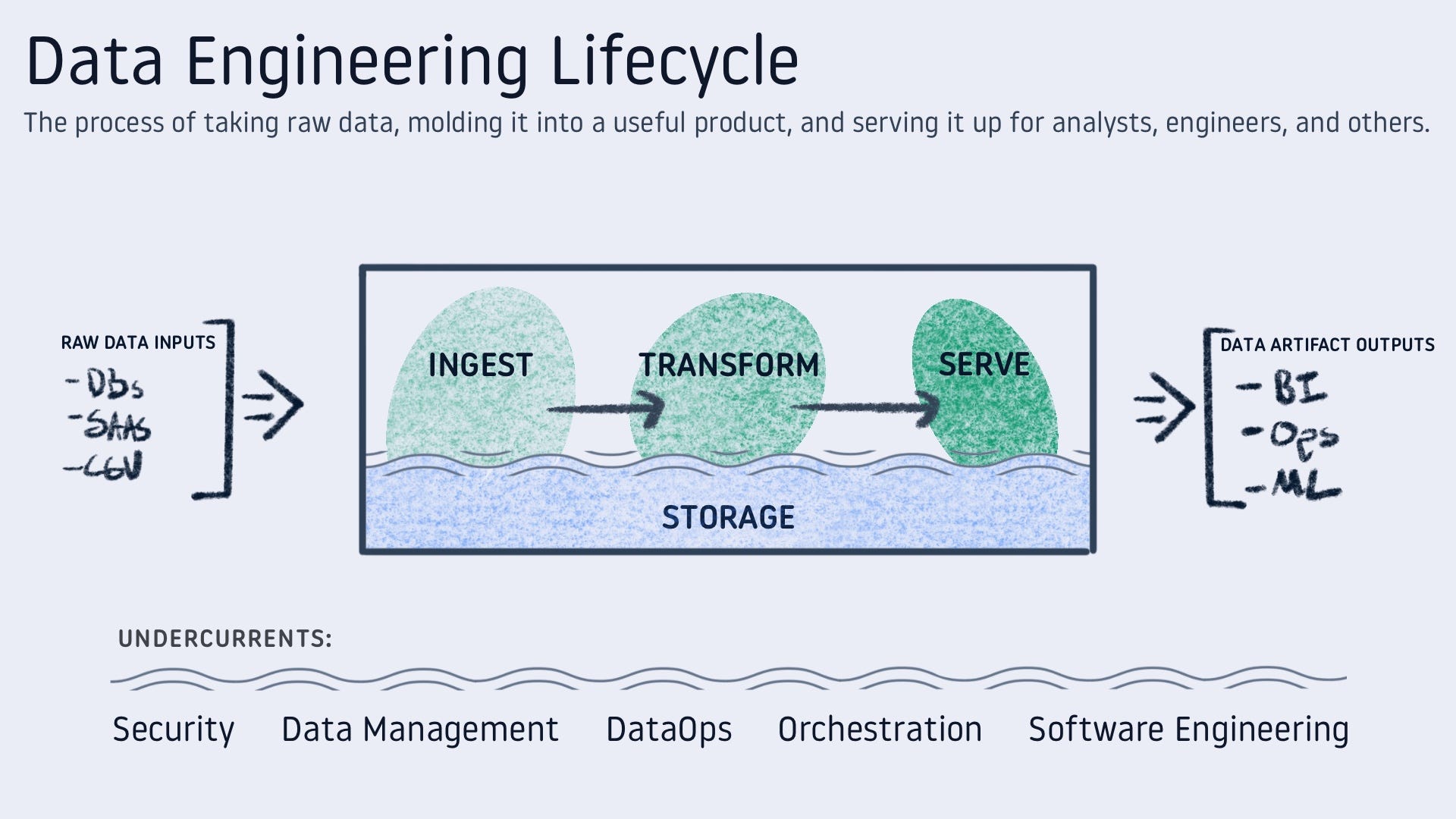

It has been said before, and I’ll say it again: dbt puts the T in ELT. In the data engineering lifecycle (Ingest→Transform→Serve), dbt sits firmly in the middle.

What if dbt could do more? Maybe even things that would make dbt Labs uncomfortable. That is actually the mission of Josh Wills’ dbt-duckdb adapter!

The main value-add unique to this flavor of dbt is the ability to expand into the Ingest and Serve stages of the lifecycle.

dbt-duckdb can read from and write to external sources. In practice, this looks like reading a glob of Parquet files from an S3 data lake, then writing the transformed output to a new bucket. The same can be done with Excel and Google Sheets. I was even able to coerce dbt into pulling data from a REST API. In a traditional modern data stack (MDS) deployment, you would need at least four vendors to get the job done. I used only my laptop, for free.
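One plausible way to handle the REST API case is a dbt Python model, which dbt-duckdb runs in-process. This sketch is hypothetical throughout: the endpoint URL, the payload shape and the `flatten_orders` helper are all assumptions. Keeping the parsing in a plain function means it can be tested without dbt, pandas or network access.

```python
"""Hypothetical dbt-duckdb Python model, e.g. models/staging/stg_api_orders.py."""
from __future__ import annotations

import json
from urllib.request import urlopen

# Hypothetical endpoint, assumed to return a JSON list of order objects.
API_URL = "https://api.example.com/orders"


def flatten_orders(payload: list[dict]) -> list[dict]:
    """Keep only the fields downstream models need, coercing types along the way."""
    return [
        {
            "order_id": int(rec["id"]),
            "customer_id": int(rec["customer"]["id"]),  # assumed nested shape
            "amount_usd": float(rec["amount"]),
        }
        for rec in payload
    ]


def model(dbt, session):
    """Entry point dbt calls; the returned DataFrame becomes the model's table."""
    import pandas as pd  # imported lazily so flatten_orders stays testable without pandas

    dbt.config(materialized="table")
    with urlopen(API_URL, timeout=30) as resp:
        payload = json.load(resp)
    return pd.DataFrame(flatten_orders(payload))
```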

BI-as-Code

No-Code, Low-Code: forget all that and give me X-as-Code.

GUI-based technical workflows have always frustrated me. They are difficult to update, and it’s easy to run into their guardrails. That’s why I prefer the creative freedom of code.

Once your work has been codified, you get a whole suite of extra benefits:

Version Control

Deployment Environments

LLM Rubber Ducks…

… there’s more, but need I say more?

Cloud Engineers got Infrastructure-as-Code. DevOps Engineers got Continuous Integration-as-Code. SecOps got Compliance-as-Code. Now, Analytics Engineers get to join the fun with BI-as-Code.

Looker’s LookML started pushing in this direction, but the tool to watch in this space is Evidence.dev. In Evidence, Markdown provides the context and report structure, while SQL powers the charts and makes queries reusable.

To dig deeper, I suggest reading Archie’s Guide to Running Outstanding Business Reviews.

Paved Path to Prod

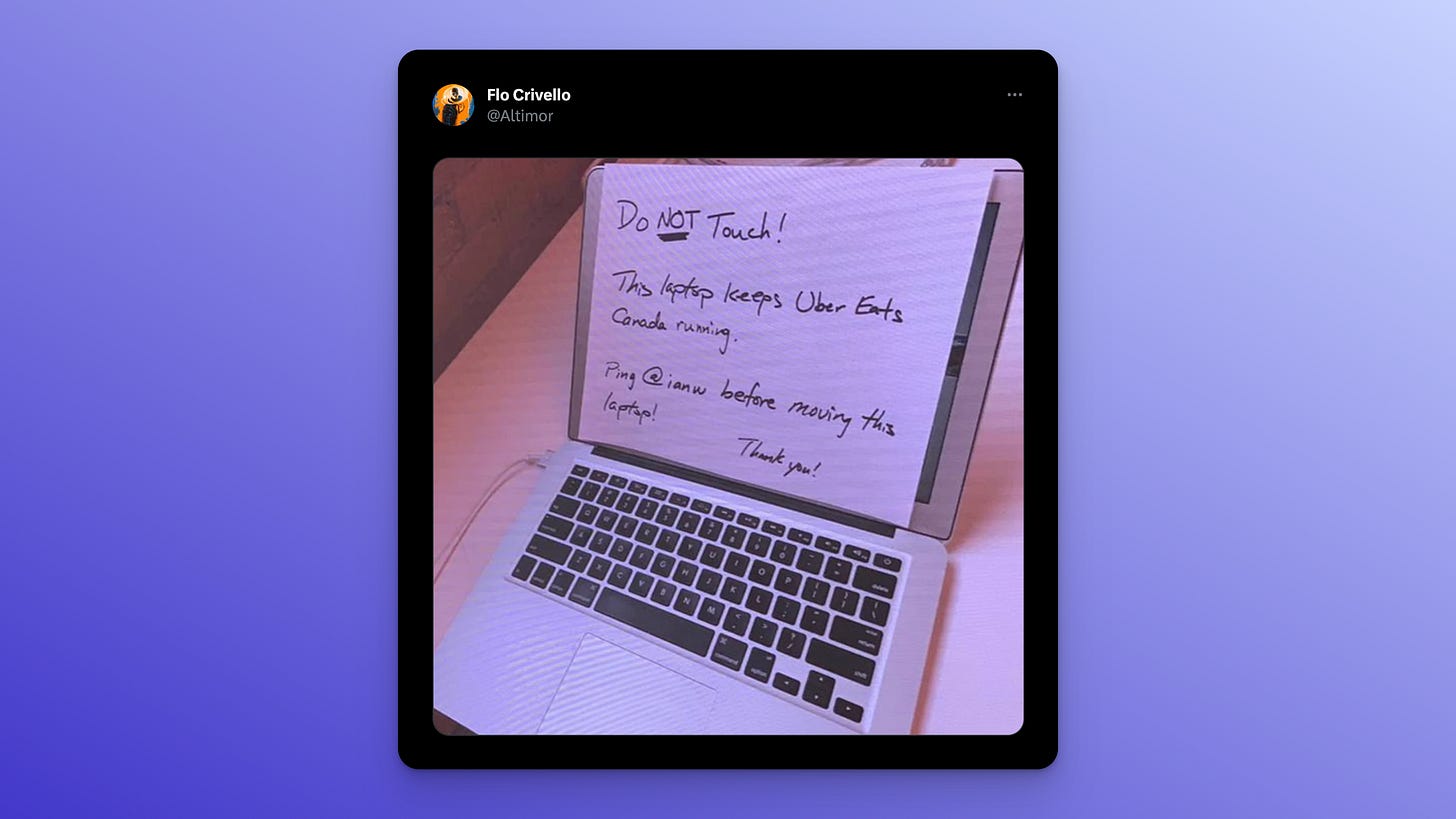

The previous four capabilities can all run locally. At a micro level, that makes it quick to get started. At a macro level, being able to set up a professional analytics stack for free helps democratize the practice.

That’s all well and good until your laptop becomes critical infrastructure.

To avoid this fate, we need to lift our work into a production environment. That’s often easier said than done: the path to production can be an arduous grind of integrations and configurations. When you’re using DuckDB, that path has been paved smooth for you. MotherDuck can lift your work to production with a simple change to the connection string: `main.duckdb` → `md:main.duckdb`.
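To make that switch explicit, here is a minimal sketch of a hypothetical helper that returns the local file in dev and the MotherDuck path in prod. The connection strings mirror the ones above. The function name and the `DBT_TARGET` environment variable are assumptions. Actually connecting would need the `duckdb` package and, for prod, a MotherDuck token.

```python
from __future__ import annotations

import os


def duckdb_path(database: str = "main", target: str | None = None) -> str:
    """Return a DuckDB connection string for the given target (hypothetical helper).

    If `target` is omitted, fall back to a hypothetical DBT_TARGET environment
    variable, and then to "dev".
    """
    target = target or os.environ.get("DBT_TARGET", "dev")
    local_file = f"{database}.duckdb"
    # Promoting to production is just the "md:" prefix in front of the same name.
    return f"md:{local_file}" if target == "prod" else local_file


if __name__ == "__main__":
    # With the duckdb package installed: duckdb.connect(duckdb_path())
    print(duckdb_path())
```

In a dbt project, the same switch would more typically live in `profiles.yml` as separate dev and prod targets, each with its own `path`.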

—

The analytics industry may not be vibing like it was in 2019, but take a closer look and you’ll see some exciting projects coming together. We’ll be back!

What are the exciting projects you’re keeping an eye on?

CURATED COLUMNS

A few interesting articles, podcasts, or websites I recently came across

How Analytics Drives Operational Excellence

Ergest’s Substack is a weekly read for me, and this post struck a chord. He sets a macro goal, excellence through analytics, then breaks it down into a tangible set of necessary conditions. I could spot the conditions missing from past analytics initiatives that fell short, but I also found it encouraging as a tool for reviewing a business’s analytics readiness.

Fundamentals of Data Engineering

In sports, new players and pros drill the same fundamentals. In data engineering, aspiring and seasoned practitioners can add this book to their training routine. Always drill the fundamentals.

I appreciate that this book is not prescriptive in its approach to data engineering. Instead, the authors focus on “The Data Engineering Lifecycle” and its “undercurrents.” This clicked with me for the same reason as Ergest’s goal tree: good framing. The lifecycle helps you keep the bigger picture in view while tackling complexity in practice.

DATA DOODLES

Documenting analytics jargon, visually