Hey y’all,

Here’s what’s new:

🌴 My mom is officially retired! Congrats Mom! I flew to Kentucky to surprise her but quickly got back to reality. Someone’s gotta work around here 🙂

🌮 I love Mexican food - and there is no better place for it than Guadalajara in my hometown. I was happy to get a fix while I was home.

♻️ After five years of hard use, my monitor went kaput. I’m not sure what to replace it with. Any strong recommendations?

FRESH FEATURE

The Good, The Bad, and The Ugly: What to make of the recent dbt updates

Lately, dbt has put me on an emotional rollercoaster.

YAY - dbt Labs invests in the metrics layer by acquiring Transform

BOO - the dbt_metrics package is deprecated

YAY - dbt embraces the data mesh with multi-project support

BOO - multi-project collaboration is an Enterprise-only feature

YAY - dbt Core 1.6 has been released

BOO - dbt Cloud is moving to usage-based pricing

This week I took a step back to try to make sense of all the changes. Let’s get the bad news out of the way first.

The Bad: Pricing Changes

Last week dbt Labs announced its second price increase for dbt Cloud in less than a year.

The first change doubled the price per seat, from $50 to $100 per month.

The latest change adds a charge for usage above a baseline: each successful model build beyond the first 15,000 per month costs $0.01.
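To put that in concrete terms, here is a back-of-the-envelope estimator. It assumes the 15k baseline resets monthly and uses the $100 seat price from above; the function name and the example project size are hypothetical, and this is a rough sketch, not dbt Labs’ billing logic.

```python
# Back-of-the-envelope dbt Cloud bill estimate (hypothetical helper, not dbt Labs' billing logic).
# Assumptions: $100 per seat per month, 15,000 successful model builds included per month,
# and $0.01 for each successful build beyond that.

SEAT_PRICE_CENTS = 10_000       # $100.00 per seat per month
INCLUDED_BUILDS = 15_000        # baseline of successful model builds (assumed monthly)
OVERAGE_CENTS_PER_BUILD = 1     # $0.01 per build over the baseline


def monthly_cost_dollars(seats: int, successful_builds: int) -> float:
    """Estimate one month's bill, computing in integer cents to avoid float drift."""
    overage_builds = max(0, successful_builds - INCLUDED_BUILDS)
    total_cents = seats * SEAT_PRICE_CENTS + overage_builds * OVERAGE_CENTS_PER_BUILD
    return total_cents / 100


if __name__ == "__main__":
    # Hypothetical: 5 seats, 300 models rebuilt by every hourly run for 30 days.
    builds = 300 * 24 * 30  # 216,000 successful builds
    print(f"{builds:,} builds -> ${monthly_cost_dollars(5, builds):,.2f}")  # 216,000 builds -> $2,510.00
```

In this made-up case the seats cost $500 and the overage $2,010, so the usage charge, not the seat price, dominates the bill.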

Let’s unpack that some more. What counts as a “successful model build”? It is:

not usage within the dbt Cloud IDE

not usage within a local dev environment

not seeds, tests, or snapshots

not ephemeral models

not a model that errors

not any canceled model downstream of an error

a successful table, view, or incremental materialization
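One way to see which builds would count is to tally them from dbt’s run artifacts. This sketch assumes the usual layout of target/run_results.json (a results list whose entries carry unique_id and status) and target/manifest.json (a nodes map whose entries carry resource_type and config.materialized). It only applies the rules listed above; it cannot tell whether a run came from the IDE or a local environment, so the assumption is that you point it at artifacts from production and CI jobs. The function names are made up for illustration.

```python
import json
from pathlib import Path

# Per the rules above: only these materializations count, and only on success.
BILLABLE_MATERIALIZATIONS = {"table", "view", "incremental"}


def count_billable_builds(run_results: dict, manifest: dict) -> int:
    """Count successful table/view/incremental model builds in one dbt invocation.

    Assumption: the artifacts come from a production or CI job; IDE and local
    runs are not billed, and nothing in these files says where a run happened.
    """
    nodes = manifest.get("nodes", {})
    billable = 0
    for result in run_results.get("results", []):
        node = nodes.get(result.get("unique_id"), {})
        if node.get("resource_type") != "model":
            continue  # seeds, tests, and snapshots don't count
        if node.get("config", {}).get("materialized") not in BILLABLE_MATERIALIZATIONS:
            continue  # e.g. ephemeral models
        if result.get("status") == "success":
            billable += 1  # errored and skipped models don't count
    return billable


def count_from_target(target_dir: str = "target") -> int:
    """Read the artifacts a dbt run writes to target/ and count billable builds."""
    target = Path(target_dir)
    run_results = json.loads((target / "run_results.json").read_text())
    manifest = json.loads((target / "manifest.json").read_text())
    return count_billable_builds(run_results, manifest)
```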

So keep an eye on your production and CI dbt run results.

Pricing changes are never fun, but dbt Labs has provided a runway. Existing accounts have a year to plan before the change takes effect, and new accounts have the 15k baseline as a cushion to grow into. Once my initial reaction faded, I realized this is manageable news.

If you are still concerned about the pricing change, the good news is you are in control.

The Good: Core Control

Up to this point, I have been reckless with my model materializations. For my main production build, I would run a bare dbt run - no node selection whatsoever. In a usage-based world, this would be a good way to rack up quite the tab!

I must take back control. I see two ways to cut down on unnecessary materializations:

Avoid resetting view definitions that have not changed.

Check if new data is available from the source before updating tables.

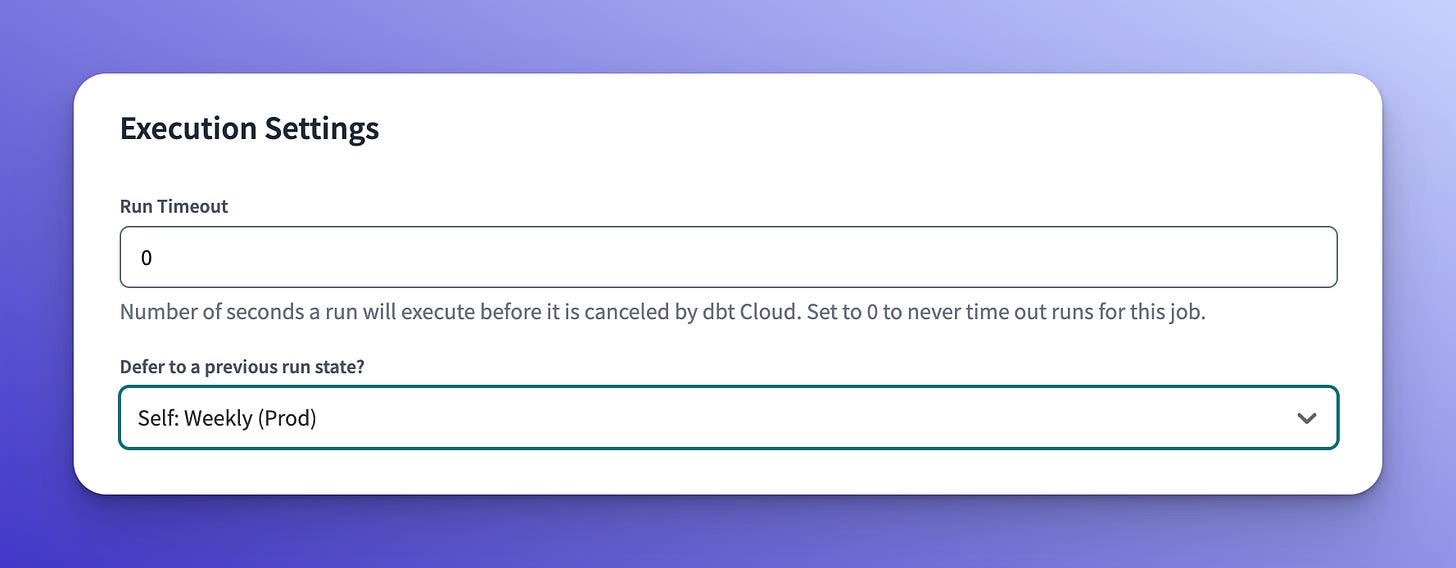

The first situation is easy enough to address: push the Slim CI workflow into production by updating the production dbt Cloud job’s execution settings to “defer to self.”

With the production job now running in deferred mode, your dbt command could look something like this:

```shell
dbt run -s state:modified config.materialized:table config.materialized:incremental
```

With this command, tables and incremental models will continue to build on a regular schedule, but views will only update when the underlying query has been changed - like when a PR is merged.
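Views drop out because space-separated selectors in dbt are combined as a union: a model is selected if it matches any one of them. The toy sketch below imitates that set logic with made-up model names and a plain modified flag standing in for the state comparison; it illustrates the selection rule, not how dbt implements it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    materialized: str
    modified: bool  # stand-in for dbt's state comparison against the deferred manifest


def select_union(models: list[Model]) -> set[str]:
    """Toy version of: state:modified config.materialized:table config.materialized:incremental

    Space-separated selectors are unioned, so a model is picked if ANY criterion matches.
    """
    modified = {m.name for m in models if m.modified}
    tables = {m.name for m in models if m.materialized == "table"}
    incrementals = {m.name for m in models if m.materialized == "incremental"}
    return modified | tables | incrementals


if __name__ == "__main__":
    project = [  # hypothetical models
        Model("stg_orders", "view", modified=False),
        Model("stg_customers", "view", modified=True),
        Model("fct_orders", "incremental", modified=False),
        Model("dim_customers", "table", modified=False),
    ]
    print(sorted(select_union(project)))  # ['dim_customers', 'fct_orders', 'stg_customers']
```

Only the unchanged view, stg_orders, is left out. Joining the same criteria with commas instead would intersect them, which is a very different selection.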

The second situation, only materializing tables when new source data is available, does not have an elegant solution, but it does have a simple one. If you understand the refresh cadence of your sources, you can schedule each dbt job to run only once after each load.

You could get fancier with your dbt Cloud schedule, such as having Fivetran kick off dbt Cloud jobs when a sync completes, but if you’re meeting your data availability SLAs, there is no need to overcomplicate the matter.
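When sources land on a predictable cadence, the schedule itself can encode “run once after each load.” Here is a small sketch that turns source load hours into a daily cron expression for a job’s custom schedule. The helper is hypothetical, and the load hours, the one-hour buffer, and treating the hours as UTC are all assumptions you would tune to your connectors’ real sync times.

```python
def cron_after_loads(load_hours_utc: list[int], buffer_hours: int = 1) -> str:
    """Build a daily cron expression that fires buffer_hours after each source load.

    Hypothetical helper: load_hours_utc are the hours (0-23, assumed UTC) when your
    sources typically finish loading; buffer_hours pads for slow syncs.
    """
    # Deduplicate and wrap past midnight, then sort so the cron hour list is ordered.
    run_hours = sorted({(hour + buffer_hours) % 24 for hour in load_hours_utc})
    return f"0 {','.join(str(hour) for hour in run_hours)} * * *"


if __name__ == "__main__":
    # Sources assumed to finish loading at 02:00 and 14:00 -> run at 03:00 and 15:00.
    print(cron_after_loads([2, 14]))  # 0 3,15 * * *
```

Two runs a day instead of 24 is where most of the savings in a table-heavy project come from.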

With some planning and cleverness, you’ll be able to stretch the 15k model buffer further. It will likely trim your cloud data warehouse spend, too. Win-win!
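To see how far the two changes can stretch the baseline, here is a rough count of monthly builds for a hypothetical project with 120 table and incremental models and 180 views. The project size, the 20 changed views per month, and the run cadences are made-up numbers; plug in your own.

```python
def monthly_builds(tables: int, views: int, runs_per_day: int,
                   views_every_run: bool, changed_views_per_month: int = 0,
                   days: int = 30) -> int:
    """Count successful model builds in a month (hypothetical estimator).

    tables: table + incremental models, rebuilt on every scheduled run.
    views: rebuilt on every run by a bare dbt run, or only when changed
    (changed_views_per_month) when the job selects state:modified in deferred mode.
    """
    runs = runs_per_day * days
    view_builds = views * runs if views_every_run else changed_views_per_month
    return tables * runs + view_builds


if __name__ == "__main__":
    print(monthly_builds(120, 180, 24, views_every_run=True))  # 216000: bare dbt run, hourly
    print(monthly_builds(120, 180, 24, False, changed_views_per_month=20))  # 86420: views only on change
    print(monthly_builds(120, 180, 2, False, changed_views_per_month=20))  # 7220: also aligned to 2 loads/day
```

Under those assumptions, the bare hourly run is over 14 times the 15k baseline, while combining both changes lands comfortably under it.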

The Ugly: Managing State

By default, dbt is designed to be stateless and idempotent. This serves dbt users well when they are getting started. The cracks start to show as more collaborators get involved, or as you want to be more targeted about what you build.

This is where dbt’s Slim CI comes in. It compares the manifest.json file from a prior run to the manifest of the current run, and from that comparison dbt can pick out what has changed:

Is there a new model?

Did a materialization strategy change?

Was the underlying query updated?
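Those three questions can be sketched as a comparison of two manifest.json files. This simplified version assumes each model node carries config.materialized and a checksum object (a hash of the model file), as recent manifests do. dbt’s own state:modified logic checks more than this, such as macro and config changes, so treat the function (a hypothetical name) as an illustration rather than a replacement.

```python
def diff_manifests(old: dict, new: dict) -> dict[str, set[str]]:
    """Classify models as new, re-materialized, or query-changed between two manifests.

    Simplified sketch: compares config.materialized and the file checksum only.
    A config() change inside the .sql file also changes the checksum.
    """
    def models(manifest: dict) -> dict[str, dict]:
        return {uid: node for uid, node in manifest.get("nodes", {}).items()
                if node.get("resource_type") == "model"}

    old_models, new_models = models(old), models(new)
    changes: dict[str, set[str]] = {"new": set(), "materialization": set(), "query": set()}
    for uid, node in new_models.items():
        prev = old_models.get(uid)
        if prev is None:
            changes["new"].add(uid)  # Is there a new model?
            continue
        if node["config"].get("materialized") != prev["config"].get("materialized"):
            changes["materialization"].add(uid)  # Did a materialization strategy change?
        if node["checksum"]["checksum"] != prev["checksum"]["checksum"]:
            changes["query"].add(uid)  # Was the underlying query updated?
    return changes
```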

With those differences identified, dbt can be selective about what needs to run. For pull requests, you can limit a run to only the models that are downstream of the updated code.
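Selecting the changed models plus everything downstream of them (what the + suffix in state:modified+ does) is a graph walk. Assuming you already have the changed unique_ids and the child_map from manifest.json, which maps each node to its direct children, a breadth-first traversal looks like this. The function name is hypothetical, and note that child_map lists tests as children too.

```python
from collections import deque


def downstream_of(changed: set[str], child_map: dict[str, list[str]]) -> set[str]:
    """Return the changed nodes plus every node downstream of them (breadth-first)."""
    selected = set(changed)
    queue = deque(changed)
    while queue:
        node = queue.popleft()
        for child in child_map.get(node, []):
            if child not in selected:  # skip nodes already reached via another path
                selected.add(child)
                queue.append(child)
    return selected
```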

This all sounds nice, in theory. In practice, it can produce some gnarly errors.

Several times I have gotten stuck in a bad state. Each time, I end up ripping out the deferral, doing a full refresh to get back to a clean state, and then turning deferral back on for next time. It is a band-aid approach and bad practice on my part.

Understanding and leveraging the capabilities behind the --state flag is becoming too important to ignore. New commands like dbt clone, the need for targeted builds in prod and CI, and faster development cycles as the number of models grows all rely on managing state.

Experimenting with state configuration would be time well spent. Hopefully these rough edges will be smoothed over soon.

Until the Next Ride

Some changes are exciting; others are frustrating. Overall, I take these changes as a positive sign.

I remember the first dbt project I deployed. The director of analytics said, “I do not know what we are paying for dbt, but whatever the amount, it is worth it.” Even with usage-based pricing, I believe that still holds true.

CURATED COLUMNS

A few interesting articles, podcasts, or websites I recently came across

This week I’m treating this segment like my citations for all the announcements covered in the Fresh Feature.